This was it. The last chance in my lifetime to see Venus pass in front of the Sun, and the last chance to measure, the old-fashioned way, the distance from the Earth to the Sun. That distance, called the astronomical unit (AU), is the basic unit of astronomy. This blog entry documents my process, the assumptions I made, and the rewarding feeling of witnessing one of nature's most amazing events.

It had been a busy week, and I hadn't had time to prepare. On the morning of June 5th, 2012 I scrounged through the garage for what I might need. First the binoculars. I didn't know this, but there's a cap on the front of many binoculars that pops off to reveal a hole, tapped for a 1/4-20 screw.

Next I found a screw, a couple nuts, and an angle bracket to mount the binoculars onto the tripod.

The rest was done with duct tape, cardboard, and paper. I made a screen from cardboard and paper onto which the image would be projected. Then I made a shield to shade the screen and to cover one side of the binoculars. I added a paper flap to temporarily cover the other side of the binoculars so the intensity of the sun's rays wouldn't damage the binoculars' optics between viewings.

I brought the whole rig down to CSU Sacramento, where the staff of the observatory on top of the psychology building was offering views to the public. I figured I could set my rig on a corner of the roof so I could take my measurements and see the transit with their equipment too. Unfortunately, it was way too crowded there, so I set up behind the building.

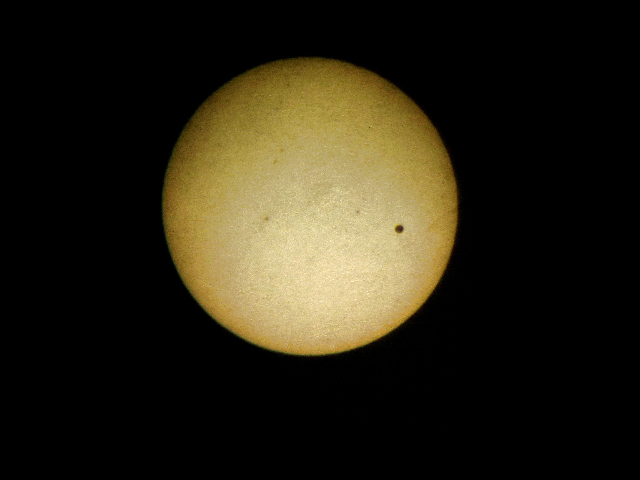

All in all, the image wasn't too bad, if I do say so myself. Solar North is to the right and East is on top, I believe. The image needs to be flipped and rotated 90 degrees to match the image from a direct view. Venus can clearly be seen, and you can just make out three groups of sunspots. My first goal was accomplished. I had seen the transit.

The other goal - measuring the AU - was next. I wanted to do this as independently as possible, without using any primary measurements from others. Of course, to do that I would have to be in two places at the same time, because measurement of the AU is done using parallax. By definition, I had to use measurements from someone else at a different latitude. Transit of Venus data from Mauna Kea was readily available, so I used that. Most of the measurements were made with my camera and the clock inside the camera. The only other measurements I needed, which I looked up on Wikipedia, were the angular diameter of the sun and the circumference of the earth. The angular diameter of the sun can be calculated with a pin-hole viewer, and some day I'll do that. I once saw a PBS documentary in which a team used a compass, a protractor, and a moving van to measure the size of the earth. Someday I'll use the same technique, and adjust my results accordingly. Credit Kepler.

Across much of the world, this transit can be measured and the AU calculated by documenting the times at which Venus enters and exits the disk of the sun. Unfortunately, in most of the western US, the sun set before Venus exited. My plan was to take a series of pictures and, using the times at which the pictures were taken, plot a line and calculate how long the transit would have taken had I been able to see the whole thing.

After several hours of observing and photographing the transit, I downloaded the pictures. The first problem I noticed was that the sun was all different sizes and not even round in many cases. This is because I took all the pictures hand-held and off-angle. The solution was to crop all the images to the edge of the sun and resize them to 1000x1000 pixels. I guessed, correctly, that the sun might be rotated from picture to picture. The photos could be aligned using the sunspots as a guide. Using the cursor in my photo editor, I entered the coordinates of Venus, the coordinates of the three sunspots, and the time-stamp of each photo into a spreadsheet. The sunspot I called "c" was the most easily measured, so I used that for my calculations.

| time | c x | c y | venus x | venus y |
|---|---|---|---|---|
| 143123 | 310 | 561 | 720 | 918 |
| 143727 | 312 | 526 | 606 | 944 |
| 143758 | 316 | 524 | 618 | 938 |
| 144943 | 339 | 522 | 550 | 940 |
| 151807 | 348 | 554 | 734 | 826 |
| 153106 | 356 | 531 | 752 | 788 |
| 153153 | 356 | 550 | 741 | 796 |
| 153930 | 360 | 568 | 780 | 758 |
| 153941 | 358 | 573 | 772 | 764 |
| 154020 | 351 | 543 | 746 | 765 |
| 154630 | 356 | 578 | 729 | 790 |
| 154725 | 358 | 592 | 753 | 795 |
| 155102 | 369 | 591 | 780 | 736 |
| 155113 | 375 | 609 | 788 | 738 |
| 155454 | 362 | 576 | 764 | 758 |
| 155551 | 357 | 544 | 768 | 728 |
| 170411 | 332 | 490 | 710 | 669 |
| 171348 | 336 | 531 | 728 | 670 |
| 173544 | 334 | 528 | 759 | 628 |
| 175132 | 360 | 506 | 788 | 585 |
| 180101 | 351 | 525 | 789 | 578 |
| 180114 | 357 | 530 | 796 | 566 |
| 180135 | 354 | 538 | 801 | 562 |
| 180216 | 352 | 537 | 792 | 598 |
| 180632 | 354 | 540 | 798 | 573 |

The positions of the raw points (blue squares) were aligned (green triangles) by rotating them about the center of the sun according to the angular difference between the position of sunspot c in each photo and the average position of sunspot c. Then a best-fit line was calculated for the green triangles. I didn't know it at the time, but not knowing the exact position of the sun's north pole would be a contributor to the error in my measurement. If at some time in the future I can find the angle between sunspot c and solar north, I can make that correction.
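A minimal Python sketch of that rotation step, for readers who want to try it. It is not the spreadsheet used here: it assumes the center of the sun sits at pixel (500, 500) in each cropped image, the function names `polar_angle` and `rotate` are made up for the illustration, and it uses only the first three rows of the table, taking the average sunspot direction of those rows as the reference.

```python
import math

CENTER_X, CENTER_Y = 500.0, 500.0  # assumption: sun centered in each 1000x1000 crop

def polar_angle(x, y):
    """Angle of a pixel about the assumed center, in radians within [0, 2*pi)."""
    return math.atan2(y - CENTER_Y, x - CENTER_X) % (2 * math.pi)

def rotate(x, y, angle):
    """Rotate a pixel about the assumed center by `angle` radians."""
    dx, dy = x - CENTER_X, y - CENTER_Y
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    return (CENTER_X + dx * cos_a - dy * sin_a,
            CENTER_Y + dx * sin_a + dy * cos_a)

# (c x, c y, venus x, venus y) from the first three rows of the table
rows = [(310, 561, 720, 918), (312, 526, 606, 944), (316, 524, 618, 938)]

angles = [polar_angle(cx, cy) for cx, cy, _, _ in rows]
reference = sum(angles) / len(angles)  # average direction of sunspot c

aligned = []
for (cx, cy, vx, vy), angle in zip(rows, angles):
    correction = reference - angle  # how far this photo is rotated from the reference
    aligned.append(rotate(vx, vy, correction))

for point in aligned:
    print(f"({point[0]:.1f}, {point[1]:.1f})")
```

Applying the same correction to sunspot c itself puts it exactly on the reference direction, which is a handy check that the rotation has the right sign.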

In the photo below, the average radius of c is 161 pixels and the average angle is 197 degrees.
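Those two averages can be cross-checked from the table with a short Python sketch. It rests on two assumptions that are not stated in the post: that the center of the sun is at pixel (500, 500), and that angles are measured with the y axis pointing up (the reverse of image coordinates). Under those assumptions the result should land close to the 161 pixels and 197 degrees quoted above.

```python
import math

# (c x, c y) pixel coordinates of sunspot c, copied from the table
C_POSITIONS = [
    (310, 561), (312, 526), (316, 524), (339, 522), (348, 554),
    (356, 531), (356, 550), (360, 568), (358, 573), (351, 543),
    (356, 578), (358, 592), (369, 591), (375, 609), (362, 576),
    (357, 544), (332, 490), (336, 531), (334, 528), (360, 506),
    (351, 525), (357, 530), (354, 538), (352, 537), (354, 540),
]

CENTER = 500  # assumption: sun centered in the 1000x1000 crops

def polar(x, y):
    """Radius in pixels and angle in degrees (0-360) about the assumed center.

    The y axis is flipped so that the angle is measured with y pointing up.
    """
    dx, dy = x - CENTER, CENTER - y
    return math.hypot(dx, dy), math.degrees(math.atan2(dy, dx)) % 360

radii, angles = zip(*(polar(x, y) for x, y in C_POSITIONS))
mean_radius = sum(radii) / len(radii)
mean_angle = sum(angles) / len(angles)  # plain mean is safe: all angles are near 180-220
print(f"mean radius {mean_radius:.1f} px, mean angle {mean_angle:.1f} deg")
```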

Knowing the path in pixels isn't very useful, so the scale needed to be converted to arc-seconds. Since my pictures have a radius of 500 pixels and the sun has a radius of 960 arc-seconds, the path of Venus across the sun can be described as

y = 2.34x - 1510

To get the length of the transit, we need to solve for the intersections of this line with the edge of the sun.

x^2 + y^2 = 960^2

I won't write all the steps here because I haven't figured out how to use a decent equation editor, so for now I'll just tell you that I calculated the transit length to be 1477 arc-seconds in Sacramento.
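For anyone who wants the skipped steps, here is one way to get the chord length in Python. It is a sketch using a shortcut (the perpendicular distance from the sun's center to the line) rather than solving the quadratic, and the function name `chord_length` is made up for the example. With the rounded slope and intercept printed above it gives roughly 1509 arc-seconds rather than 1477, so the quoted figure possibly came from unrounded coefficients.

```python
import math

def chord_length(slope, intercept, radius):
    """Length of the part of y = slope*x + intercept that lies inside a
    circle of the given radius centered on the origin (0.0 if it misses)."""
    # Perpendicular distance from the circle's center to the line
    distance = abs(intercept) / math.hypot(1.0, slope)
    if distance >= radius:
        return 0.0
    # Half the chord is the other leg of a right triangle with hypotenuse `radius`
    return 2.0 * math.sqrt(radius**2 - distance**2)

# Line and solar radius in arc-seconds, as given in the text
length = chord_length(2.34, -1510.0, 960.0)
print(f"{length:.0f} arc-seconds")
```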

To calculate parallax, I needed to convert the transit time from Mauna Kea from seconds to arc-seconds. To do this, I needed to know the speed of Venus across the face of the sun in arc-seconds per second. I thought this would be easy. I just needed to average the change in position over the time between observations for every one of the photos. This number turned out to be way off, and I couldn't get any reasonable numbers by taking a subset of consistent looking observations.

It didn't help that I had moved 15 miles north - you can see the gap in the data - mid-way through the observation period. I was surprised that it made a difference.

In the end, I just used a ruler to measure the distance between the majority of the points and divided by my total observation time. This enabled me to convert the documented Mauna Kea transit time of 22500 seconds to 1641 arc-seconds.
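The angular speed itself isn't quoted, but it can be backed out of the two numbers above. A small Python sketch (the helper names are made up for the example):

```python
MAUNA_KEA_TRANSIT_S = 22500    # documented transit duration, in seconds
MAUNA_KEA_PATH_ARCSEC = 1641   # path length quoted in the text

# Speed of Venus across the sun implied by those two figures
rate = MAUNA_KEA_PATH_ARCSEC / MAUNA_KEA_TRANSIT_S  # arc-seconds per second

def seconds_to_arcsec(duration_s, rate_arcsec_per_s=rate):
    """Convert a transit duration to a path length on the sun."""
    return duration_s * rate_arcsec_per_s

def arcsec_to_seconds(path_arcsec, rate_arcsec_per_s=rate):
    """Convert a path length on the sun back to a transit duration."""
    return path_arcsec / rate_arcsec_per_s

print(f"rate: {rate:.4f} arc-seconds per second")
print(f"1477 arc-seconds would take {arcsec_to_seconds(1477):.0f} s at that rate")
```

At this rate the 1477 arc-second Sacramento path corresponds to a little over 20,000 seconds.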

I could have calculated the position and orientation of the Mauna Kea transit by solving for a line 1641 arc-seconds in length with a slope of 2.34, but I got impatient. I got out my ruler again and drew a line of that length, parallel to my observations. At this point I realized that perhaps parallax was not the perpendicular line between the two paths, but the north-south distance. My photos were aligned only roughly with the north pole and the parallax angle can vary wildly if the pole is not aligned. The number I came up with was 141 arc-seconds of parallax between Sacramento and Mauna Kea. This is way too big; which meant that the path of Venus across the sun was much more perpendicular to the poles than my photos showed.

I discovered an even more serious error. I realized that since Mauna Kea is to the south of Sacramento, parallax should project the transit line to the north. Since the transit was projected on the sun's northern hemisphere, it means that my calculated transit time should have been greater than Mauna Kea's.

For now there was nothing to do but forge ahead, even with bad numbers - just to complete the exercise. I applied the equations from the Exploratorium's ToV web site. I should mention here that the Exploratorium was my favorite place in the world when I was a kid.

We need to know the N-S distance from Sacramento to Mauna Kea.

The diameter of the earth is about 12,700 km.

The latitude of Sacramento is 38.6 degrees.

The latitude of Mauna Kea is 19.8 degrees.

The distance between the two locations should be:

12,700km*(sin(38.6) - sin(19.8)) = 3,620 km
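The same arithmetic as a Python sketch, mainly to show the degree-to-radian conversion that is easy to forget. The function name is made up for the example, and 12,700 km is simply the figure used in the equation above.

```python
import math

def north_south_separation(scale_km, lat_a_deg, lat_b_deg):
    """scale_km * (sin(lat_a) - sin(lat_b)), with latitudes in degrees."""
    return scale_km * (math.sin(math.radians(lat_a_deg))
                       - math.sin(math.radians(lat_b_deg)))

# Sacramento (38.6 N) and Mauna Kea (19.8 N), with the 12,700 km figure
d_ab = north_south_separation(12700, 38.6, 19.8)
print(f"{d_ab:.0f} km")
```

Note that 12,700 km is close to the earth's diameter; with a radius of about 6,371 km the same expression gives roughly half that distance.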

Using the Exploratorium equations:

E = the parallax angle.

V = E/0.72 = 54,000

D_a-b = the distance North to South between the two viewing locations.

D_e-v = the distance from Earth to Venus.

D_e-s = the distance from Earth to the Sun.

D_e-v = (0.5*D_a-b)/tan(V) = 19,200,000 km

D_e-s = D_e-v/0.28 = 68,600,000 km
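A Python sketch of these two distance formulas, with V taken in arc-seconds. The units of V are not stated above, so the input used here is an inference rather than a number from the post: a V of about 19.4 arc-seconds is what reproduces the 19,200,000 km figure with a 3,620 km baseline. The function names are made up for the example.

```python
import math

ARCSEC_PER_RADIAN = 648000 / math.pi  # 180 * 3600 / pi

def earth_venus_distance(d_ab_km, v_arcsec):
    """D_e-v = (0.5 * D_a-b) / tan(V), with V given in arc-seconds."""
    return 0.5 * d_ab_km / math.tan(v_arcsec / ARCSEC_PER_RADIAN)

def earth_sun_distance(d_ev_km):
    """D_e-s = D_e-v / 0.28, using the 0.28 ratio from the equations above."""
    return d_ev_km / 0.28

# V = 19.44 arc-seconds is an inferred value, chosen to match the post's result
d_ev = earth_venus_distance(3620, 19.44)
d_es = earth_sun_distance(d_ev)
print(f"Earth-Venus: {d_ev:,.0f} km, Earth-Sun: {d_es:,.0f} km")
```

Because the angle is tiny, the result is almost exactly inversely proportional to V: halving the parallax angle doubles the computed distance, which is why small errors in the measured paths matter so much.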

If you look it up, the correct answer is 150,000,000 km. My measurements and calculations yielded a result less than half of the true value!

Now it's time to call in some help from the professionals at NASA.

From this diagram, I realized that I should be using the Earth's ecliptic rather than the Sun's poles to determine parallax. I could see that the angle between the Earth's ecliptic and the path of Venus was only 9 degrees, rather than the 24 degrees that I got from my photos. I could also read the velocity of Venus across the face of the sun from the graph, which enabled me to calculate that the path across the sun was 1517 arc-seconds at Mauna Kea. The difference between that and my Sacramento value is less than the uncertainty in my measurement!

I'm not too disappointed, though. Even the heroic 1769 expedition of James Cook and Joseph Banks failed to return usable data, so I am in good company. It was a fun project and I got to see the orbital mechanics of our solar system in action!

I also have some ideas for salvaging my data. Perhaps I can get some data from my move north in the middle of my data collection. The data clearly shows the projected path moving south after I moved north. At least the data moved in the right direction!

Data from Australia shows a projection to the north of the projection I observed, so hopefully the increased distance can reduce the relative error of my measurements. This site may yield data I can use: Astronomical Association of Queensland (AAQ). It lists predicted values rather than measured values, but I may use it in a pinch.

I also located measured data from S. Bolton in Canberra. In a later post I'll apply this data and re-evaluate my results!

| Canberra -35.230167 latitude | Time in seconds | avg entry and exit | duration |
|---|---|---|---|
| 22:16:41 | 80201 | 80742 | 0 |
| 22:34:43 | 81283 | | 22182 |
| 04:26:46 | 102406 | 102924 | |
| 04:44:02 | 103442 | | |
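The numbers in this table can be reproduced with a few lines of Python. This sketch assumes the four clock times are the two ingress contacts and the two egress contacts, with the egress times falling on the following day; the helper name `to_seconds` is made up for the example.

```python
def to_seconds(hms, next_day=False):
    """Convert 'HH:MM:SS' to seconds since midnight of the first day."""
    hours, minutes, seconds = (int(part) for part in hms.split(":"))
    total = hours * 3600 + minutes * 60 + seconds
    return total + 86400 if next_day else total

# Assumed pairing: first two times are ingress, last two are egress (next day)
ingress = [to_seconds("22:16:41"), to_seconds("22:34:43")]
egress = [to_seconds("04:26:46", next_day=True),
          to_seconds("04:44:02", next_day=True)]

mid_ingress = sum(ingress) / 2   # average of the two ingress contacts
mid_egress = sum(egress) / 2     # average of the two egress contacts
duration = mid_egress - mid_ingress

print(ingress, egress)
print(f"duration: {duration:.0f} s")
```

The result matches the 22182 seconds in the duration column, measured between the midpoints of ingress and egress.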